Too Long, Must-read

The [Live] multi-cloud-native architecture can deliver a 10X better TCO than the other variants. Other insights from our test:

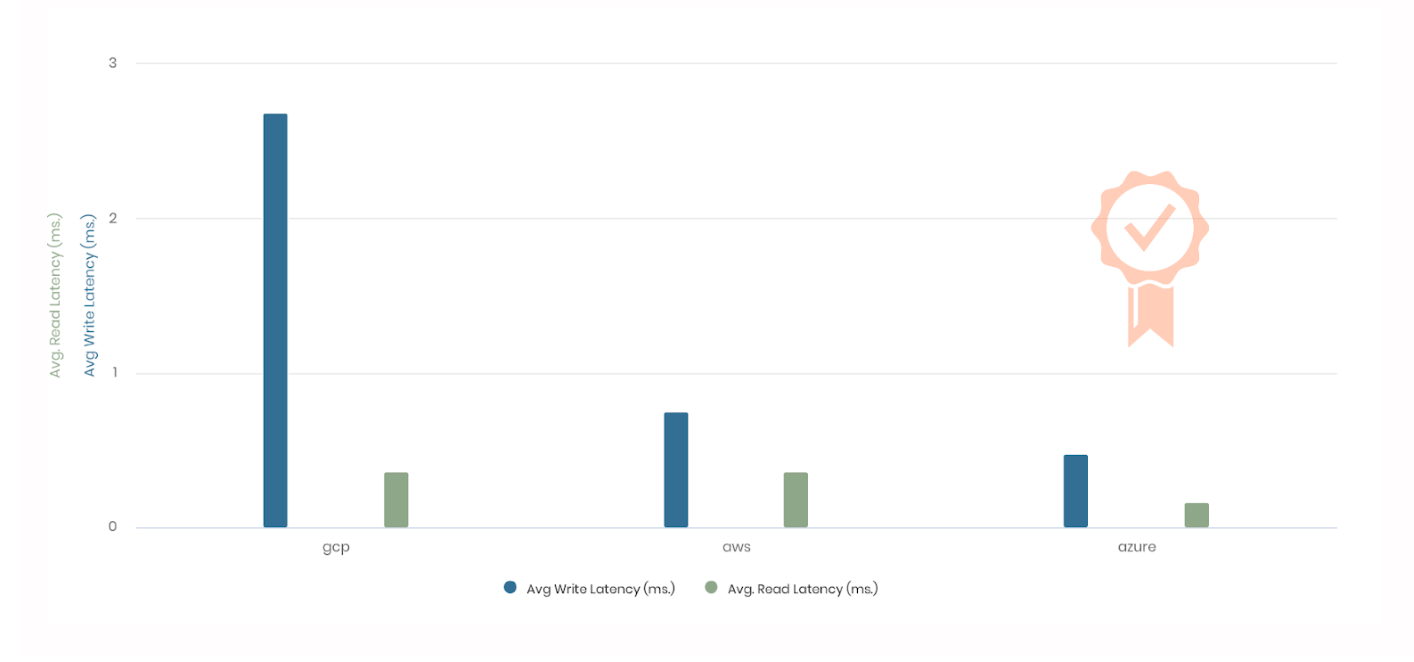

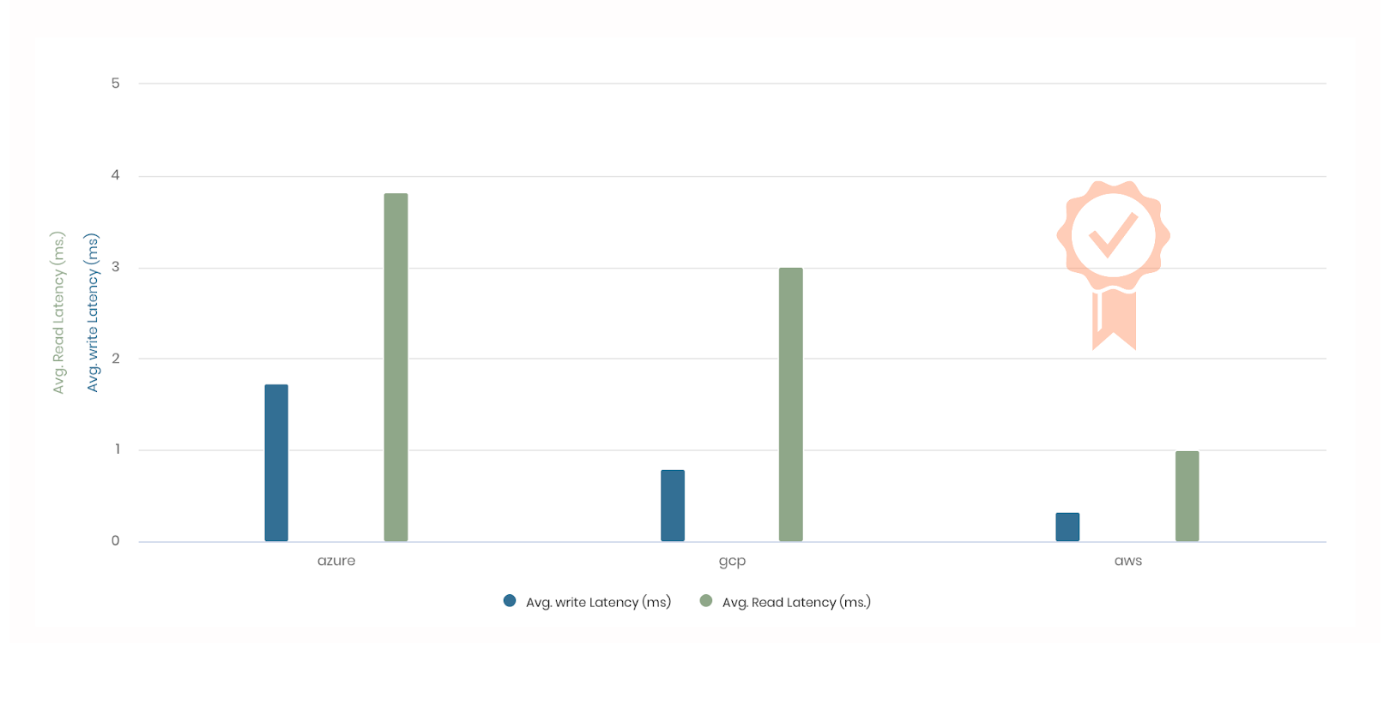

- Azure’s NVMe drives beat AWS and GCP on latency by a significant margin.

- AWS delivered the lowest IO latencies when using the best available network-attached storage.

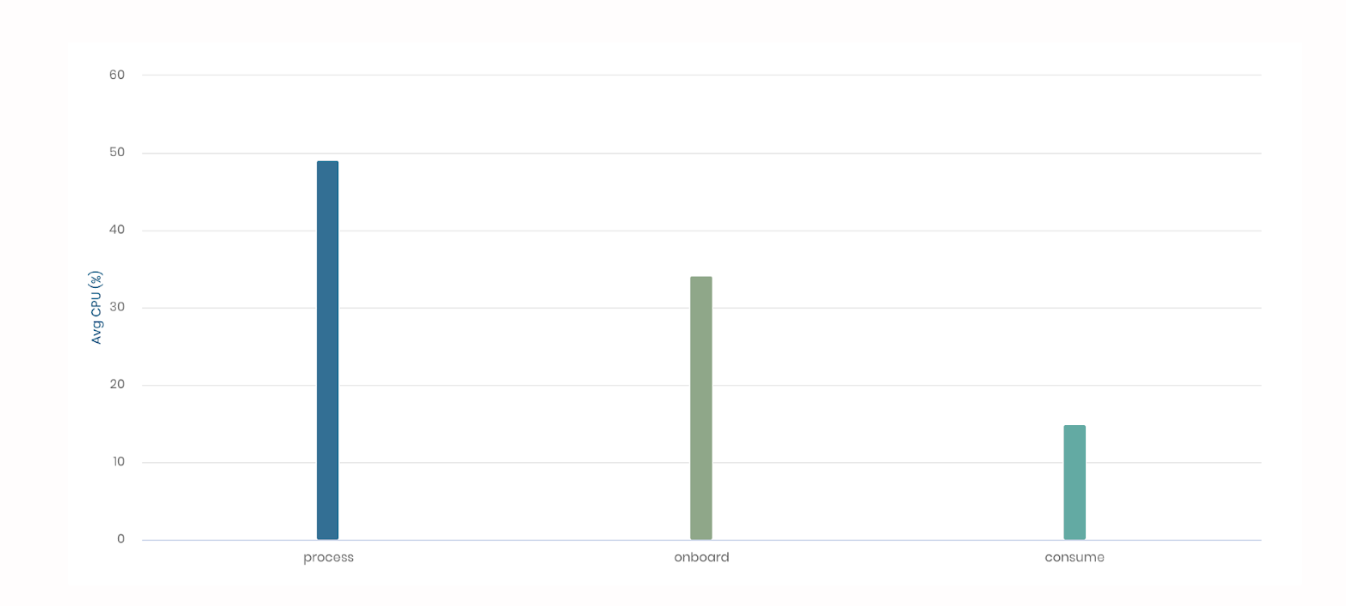

- bi(OS) flips compute utilization for OLAP use cases: processing consumes the most, followed by onboarding and then consumption.

Use Case

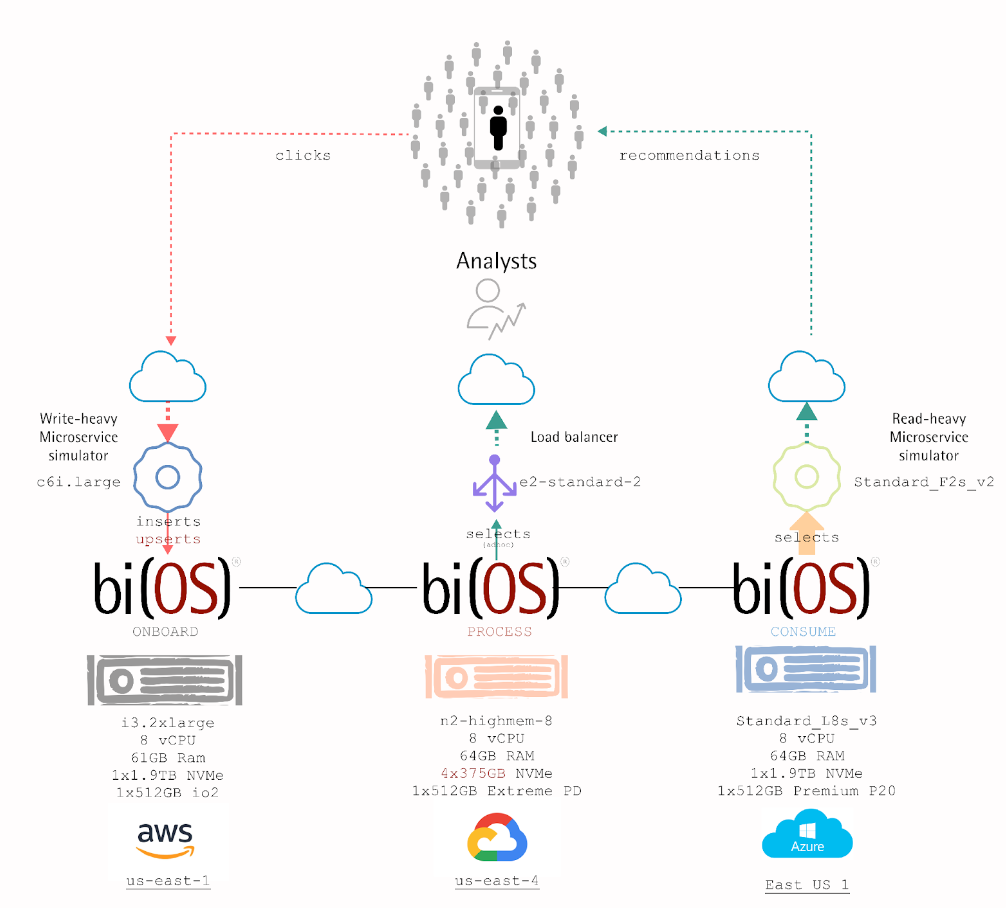

Imagine an e-tailer planning for Black Friday 2022. Their target audience is the 50M+ consumers on the eastern seaboard of the United States. Even a minute of downtime isn’t acceptable. At the same time, costs need to be on par with (or lower than) those of a single-cloud deployment, and the architecture should scale out with no single point of failure. This e-tailer also has to deliver in-session personalization for anonymous users. Based on our experience working with global enterprises and unicorns, we modeled the following read-write pattern:

- Product views, add-to-cart events, and orders are written to bi(OS) in real time by the customer’s microservices.

- A microservice reads these raw events, going back over the past few days. In other words, we modeled an “unbounded table” that data is appended to and read from in a sliding-window manner. For these reads, we did not assume any indexing or aggregation tricks.

- Business users read micro-aggregates in real time and make instantaneous decisions.
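The “unbounded table” pattern above can be sketched in a few lines of Python. This is only an illustration of append-plus-sliding-window access, not the bi(OS) API: the `Event` and `UnboundedTable` names, the field names, and the in-memory list are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Event:
    """Hypothetical raw event; the field names are assumptions for this sketch."""
    ts: datetime
    kind: str        # e.g. "product_view", "add_to_cart", "order"
    session_id: str


class UnboundedTable:
    """Append-only event log with sliding-window reads (illustrative only)."""

    def __init__(self) -> None:
        self._rows: list[Event] = []

    def append(self, event: Event) -> None:
        # Writes arrive one row at a time and are only ever appended.
        self._rows.append(event)

    def read_window(self, now: datetime, window: timedelta) -> list[Event]:
        # Deliberately a full scan: no index and no pre-aggregation,
        # mirroring the "no clever tricks" assumption in the text.
        cutoff = now - window
        return [e for e in self._rows if cutoff <= e.ts <= now]


table = UnboundedTable()
now = datetime(2022, 11, 25, 12, 0, 0)
table.append(Event(now - timedelta(days=10), "product_view", "s1"))
table.append(Event(now - timedelta(days=2), "add_to_cart", "s2"))
table.append(Event(now - timedelta(hours=1), "order", "s2"))

recent = table.read_window(now, timedelta(days=3))
```

Here `recent` holds only the two events from the last three days; the ten-day-old product view falls outside the window.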

Setup

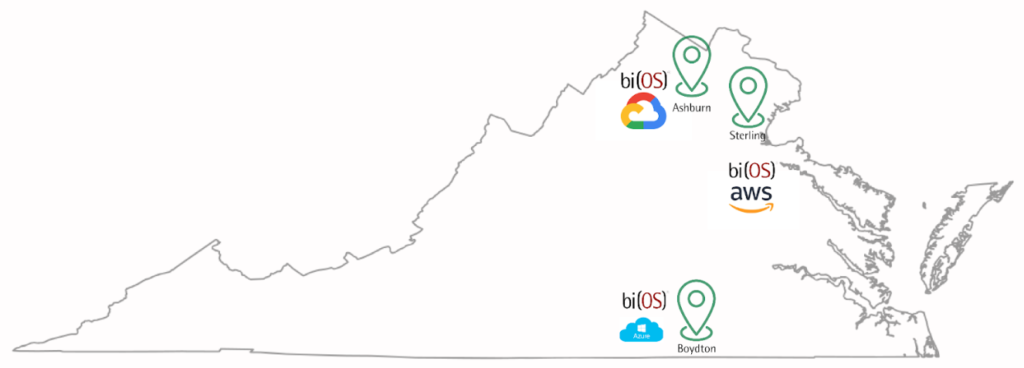

bi(OS) was deployed in a multi-cloud manner across the Big 3’s regions in Virginia, US. Virginia was the location most commonly available across the Big 3 for the resources our test required.

We attempted to keep the configuration consistent across the Big 3 with the following rules –

- Aim for 8 GB of RAM per vCPU and use 8-vCPU machines

- Use local SSDs for data; bi(OS) provides the redundancy across host, rack, DC, and cloud

- Use a single high-performance network-attached disk of 512 GB for logs

- Up to 10 Gbps of network IO bandwidth
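As a rough sketch, the first two rules above can be written as a filter over candidate machine shapes. The shape names below are hypothetical placeholders, not the instance types used in the test, and treating the “aim for” RAM target as a strict equality is an assumption made to keep the example short.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MachineShape:
    name: str            # hypothetical label, not a real instance type
    vcpus: int
    ram_gb: int
    has_local_ssd: bool


def meets_rules(shape: MachineShape) -> bool:
    """Check the 8-vCPU, 8 GB-per-vCPU, and local-SSD rules."""
    return (
        shape.vcpus == 8
        and shape.ram_gb == 8 * shape.vcpus   # 8 GB per vCPU -> 64 GB total
        and shape.has_local_ssd
    )


candidates = [
    MachineShape("shape-a", vcpus=8, ram_gb=64, has_local_ssd=True),
    MachineShape("shape-b", vcpus=8, ram_gb=32, has_local_ssd=True),
    MachineShape("shape-c", vcpus=16, ram_gb=128, has_local_ssd=False),
]
matching = [s.name for s in candidates if meets_rules(s)]
```

Only `shape-a` passes: 8 vCPUs at 8 GB each gives 64 GB of RAM, with local SSDs for data.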

We ran a sustained load of ~1000 operations per second (inserts + upserts + selects). Inserts and upserts were done one row at a time, while each select read ~500 rows. The load ran for 9+ hours, and no part of the system was saturated during that time.
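To put the load in perspective, the figures above can be turned into totals. The sketch below uses the stated lower bounds (1000 operations per second, 9 hours, 500 rows per select). The split between selects and writes is not given here, so `select_fraction` is a hypothetical parameter, and the 0.5 used in the example is purely illustrative.

```python
def load_totals(ops_per_sec: int, hours: int, select_fraction: float,
                rows_per_select: int = 500) -> dict[str, int]:
    """Estimate operation and row counts for a sustained load.

    select_fraction is an assumed share of operations that are selects;
    the remaining operations are single-row inserts or upserts.
    """
    total_ops = ops_per_sec * hours * 3600
    selects = int(total_ops * select_fraction)
    writes = total_ops - selects
    return {
        "total_ops": total_ops,
        "selects": selects,
        "rows_written": writes,                    # one row per insert/upsert
        "rows_read": selects * rows_per_select,
    }


totals = load_totals(ops_per_sec=1000, hours=9, select_fraction=0.5)
for name, value in totals.items():
    print(f"{name}: {value:,}")
```

Whatever the mix, 1000 operations per second over 9 hours works out to roughly 32.4 million operations; the rows-read figure depends on the assumed select share.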

Insights

- Read and Write Latencies of NVMe Drives – Azure wins.

- Read and Write Latencies of network-attached high-performance storage – AWS wins.

- bi(OS) flips compute utilization on its head for OLAP use cases.

Conclusion

We tested the Big 3 using the [live] multi-cloud deployment mode of bi(OS). The test produced a ton of data for price-performance comparisons. We plan to analyze all of it and publish a thorough report in the coming days. In summary, the cloud confusion is real, and Isima is here to help. This system will be showcased at the premier multi-cloud conference in a few weeks. Do join us to experience it for yourself and be amazed. If you can’t wait, sign up and go live in days!